What Is Few-Shot Prompting

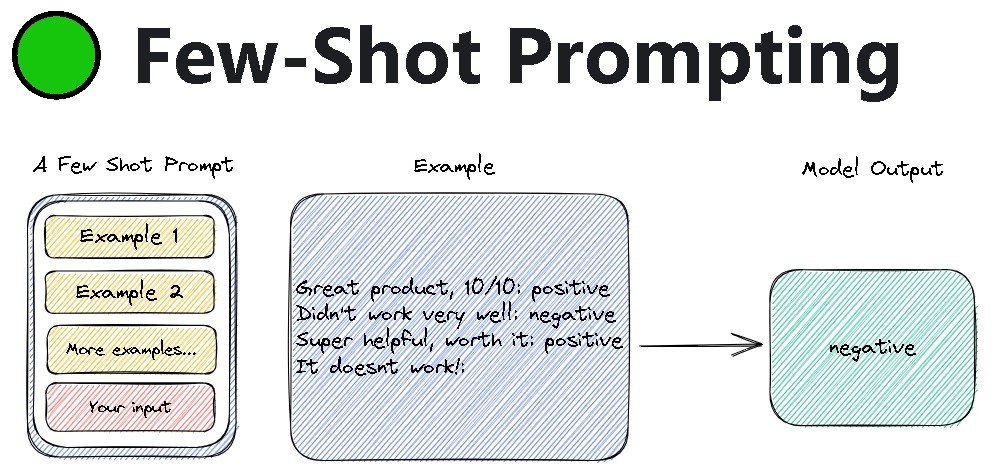

Few-shot prompting is a technique in artificial intelligence, particularly in natural language processing (NLP), in which a language model is given a small number of worked examples inside the prompt to help it understand and imitate the desired output format. Unlike traditional machine learning methods, which require large datasets to train or fine-tune a model, few-shot prompting lets a pre-trained model adapt to a new task with only a handful of examples and without any change to its weights.
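To make "presenting a small number of examples" concrete, here is a minimal Python sketch that assembles a few-shot prompt as a plain string. The function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are illustrative assumptions rather than a standard format; the resulting string would be sent to whatever language model you use. Note that nothing is trained here: the examples live entirely in the prompt.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a few-shot prompt: an instruction, k solved examples, then the new input.

    Hypothetical helper; the Input/Output layout is an assumed convention.
    """
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        # Each demonstration shows the model the exact input/output format we want.
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # The unanswered query ends with "Output:" so the model continues the pattern.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


examples = [
    ("The delivery arrived two days early.", "positive"),
    ("The box was crushed and the item broken.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    examples,
    "Great value for the price.",
)
print(prompt)
```

The model is expected to complete the final `Output:` line by following the pattern set by the two labeled reviews.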

Here’s a closer look at what few shot prompting entails:

- In-Context Learning: Few-shot prompting leverages the ability of transformer-based models to learn from context. From a few examples, the model can infer the pattern or structure of the task and produce similar outputs for new, unseen inputs.

- Efficient Use of Data: Few-shot prompting is especially valuable when labeled data is scarce or expensive to obtain, because a model can often perform well with only a handful of examples and no additional training.

- Flexibility and Adaptability: The technique can be applied to many NLP tasks, such as text classification, sentiment analysis, and question answering. Changing the instructions and examples adapts the same model to different tasks and domains.

- Human-in-the-Loop: Humans typically write or select the initial examples and may revise them based on the model’s outputs. This collaboration between people and the model can lead to more accurate and reliable results.
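The flexibility and human-in-the-loop points can be sketched together: if each task is just an instruction plus a list of demonstrations, switching tasks or refining one is a data edit rather than retraining. The `TASKS` registry and `render` function below are hypothetical names chosen for illustration, and the prompt layout is an assumption.

```python
from typing import Dict, List, Tuple

# Hypothetical task registry: each task is an instruction plus a few demonstrations.
# Adapting to a new task means editing this data, not retraining a model.
TASKS: Dict[str, Tuple[str, List[Tuple[str, str]]]] = {
    "sentiment": (
        "Label the sentiment as positive or negative.",
        [("I love this phone.", "positive"), ("It stopped working after a week.", "negative")],
    ),
    "qa": (
        "Answer the question in one word.",
        [("What is the capital of France?", "Paris"), ("What color is the clear daytime sky?", "Blue")],
    ),
}


def render(task: str, query: str) -> str:
    """Render a few-shot prompt for the named task using its stored examples."""
    instruction, examples = TASKS[task]
    shots = "\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{instruction}\n\n{shots}\nInput: {query}\nOutput:"


sentiment_prompt = render("sentiment", "The battery lasts all day.")
qa_prompt = render("qa", "What is the largest planet in the solar system?")

# Human-in-the-loop refinement (illustrative): a reviewer who sees a wrong answer
# on a tricky input adds it, correctly labeled, as a new demonstration.
TASKS["sentiment"][1].append(("Not bad at all.", "positive"))
refined_prompt = render("sentiment", "The battery lasts all day.")

print(sentiment_prompt)
print("---")
print(qa_prompt)
print("---")
print(refined_prompt)
```

The same model and the same rendering code serve both tasks; only the stored examples differ.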

In summary, few-shot prompting is a powerful technique that enables language models to adapt to new tasks quickly with minimal data. It relies on the in-context learning capabilities of transformer-based models and can be applied flexibly across a wide range of NLP tasks. As the field of AI continues to evolve, few-shot prompting is likely to play an increasingly important role in advancing the capabilities of language models.

Zero-Shot vs. Few-Shot Prompting

Zero-shot prompting and few-shot prompting are both techniques used in natural language processing (NLP) to guide language models toward specific outputs. Here’s a detailed comparison of the two:

Zero-Shot Prompting

Definition:

Zero-shot prompting means giving the model a prompt (a piece of text that guides the model’s output) without any examples and letting it generate the output from the knowledge it acquired during training.
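For contrast with the few-shot case, a zero-shot prompt can be sketched as nothing more than an instruction followed by the input. The function name `build_zero_shot_prompt` and the `Text:`/`Answer:` labels are illustrative assumptions.

```python
def build_zero_shot_prompt(instruction: str, text: str) -> str:
    """Zero-shot: the instruction and the input only, with no worked examples.

    Hypothetical helper; the Text/Answer labels are an assumed convention.
    """
    # The model must infer the task and output format from the instruction alone,
    # relying on what it learned during pre-training.
    return f"{instruction.strip()}\n\nText: {text}\nAnswer:"


prompt = build_zero_shot_prompt(
    "Translate the following text from English to French.",
    "Where is the train station?",
)
print(prompt)
```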

Key Features:

- No Examples Needed: Unlike few-shot prompting, zero-shot prompting does not require providing any examples to the model.

- Reliance on Pre-trained Knowledge: The model relies heavily on the knowledge it has acquired during pre-training to understand and process the prompt.

- Flexibility and Quick Application: Zero-shot prompting allows models to be applied quickly to different tasks without separate data annotation and training for each task.

Advantages:

- Time and Resource Efficient: It reduces the need for data annotation and model training, saving time and computational resources.

- Wide Applicability: Can be used for various types of tasks, including text classification, translation, question answering, and text generation.

Disadvantages:

- Accuracy Issues: Without examples, the model may not always generate highly accurate results, especially for complex tasks or specific domains.

- Context Misunderstanding: The model may sometimes misunderstand the prompt, leading to inaccurate outputs.

Few-Shot Prompting

Definition:

Few-shot prompting involves presenting a small number of examples to the model, within the prompt itself, to help it understand and imitate the desired output format.
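With chat-oriented models, the same idea is often expressed as a list of messages in which each example becomes a prior user/assistant exchange. The sketch below assumes the `system`/`user`/`assistant` role convention used by many chat APIs; the function name `few_shot_messages` is hypothetical, and you should check your provider’s documentation for the exact message schema it expects.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def few_shot_messages(
    system: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> List[Message]:
    """Encode few-shot examples as prior user/assistant turns in a chat transcript.

    Hypothetical helper; role names follow a common (assumed) chat-API convention.
    """
    messages: List[Message] = [{"role": "system", "content": system}]
    for user_text, assistant_text in examples:
        # Each pair looks like an earlier exchange the model "already answered",
        # which demonstrates the desired output format.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    # The real query is the final user turn; the model is expected to reply to it.
    messages.append({"role": "user", "content": query})
    return messages


msgs = few_shot_messages(
    "Extract the city name from the sentence. Reply with the city only.",
    [("I flew into Tokyo last night.", "Tokyo"), ("Our office is moving to Berlin.", "Berlin")],
    "She grew up just outside Nairobi.",
)
for m in msgs:
    print(f"{m['role']:>9}: {m['content']}")
```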

Key Features:

- In-Context Learning: The model learns from the provided examples and adapts to the new task quickly, without retraining.

- Efficient Use of Data: Allows the model to perform well with only a handful of examples.

- Human-in-the-Loop: Often involves human input to provide the initial examples or feedback to refine the model’s performance.

Advantages:

- Improved Accuracy: Given a few examples, the model can generate more accurate outputs for similar tasks.

- Flexibility and Adaptability: Can be applied to various NLP tasks and adapted to different domains by adjusting the prompts and examples.

Disadvantages:

- Data Requirement: Although the number of examples is small, some task-specific examples are still required.

- Complexity in Designing Prompts: Designing effective prompts and selecting appropriate examples can be challenging.
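One way to make example selection less ad hoc is to pick, for each new input, the labeled examples most similar to it. The sketch below uses plain word-overlap (Jaccard) similarity purely as an illustrative, assumed heuristic; embedding-based similarity is a common alternative. The function names `tokens`, `jaccard`, and `select_examples` are hypothetical.

```python
import re
from typing import List, Set, Tuple


def tokens(text: str) -> Set[str]:
    """Lowercased word set, used as a crude representation of a text."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two word sets, from 0.0 (disjoint) to 1.0 (identical)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def select_examples(
    pool: List[Tuple[str, str]], query: str, k: int = 2
) -> List[Tuple[str, str]]:
    """Pick the k labeled examples whose inputs share the most words with the query.

    Illustrative heuristic only (assumption), not a standard selection method.
    """
    q = tokens(query)
    ranked = sorted(pool, key=lambda ex: jaccard(tokens(ex[0]), q), reverse=True)
    return ranked[:k]


pool = [
    ("The shipping was fast and the packaging was neat.", "positive"),
    ("Customer support never answered my emails.", "negative"),
    ("The shipping took three weeks.", "negative"),
    ("Support resolved my issue in minutes.", "positive"),
]
chosen = select_examples(pool, "The shipping was slow.", k=2)
print(chosen)
```

For a shipping-related query, both shipping reviews are selected, so the demonstrations placed in the prompt are the ones most likely to be relevant.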

Comparison

- Data Usage: Zero-shot prompting does not require any examples, while few-shot prompting uses a small number of examples.

- Accuracy: Few-shot prompting often achieves higher accuracy than zero-shot prompting because the examples provide additional context.

- Applicability: Both techniques can be applied to various NLP tasks, but few-shot prompting may be better suited to tasks that require higher accuracy or more complex reasoning.
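The data-usage difference can be made concrete with a single hypothetical `build_prompt` helper used for both techniques: passing no examples yields a zero-shot prompt, and passing k examples yields a k-shot prompt. The prompt layout is an assumption, and character length is printed only as a rough proxy for the extra context the examples add to every request.

```python
from typing import Sequence, Tuple


def build_prompt(
    instruction: str,
    query: str,
    examples: Sequence[Tuple[str, str]] = (),
) -> str:
    """With no examples this is a zero-shot prompt; with k examples it is k-shot.

    Hypothetical helper; the Input/Output layout is an assumed convention.
    """
    lines = [instruction, ""]
    for x, y in examples:
        lines += [f"Input: {x}", f"Output: {y}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


instruction = "Classify the topic as sports, politics, or technology."
demos = [
    ("The striker scored twice in the final.", "sports"),
    ("Parliament passed the new budget.", "politics"),
    ("The chipmaker unveiled a faster processor.", "technology"),
]
query = "The senator announced a re-election campaign."

zero_shot = build_prompt(instruction, query)
few_shot = build_prompt(instruction, query, demos)

# The few-shot prompt carries extra context at the cost of a longer input
# (length here is only a rough proxy for the additional tokens per request).
print(len(zero_shot), len(few_shot))
```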

In summary, zero-shot prompting and few-shot prompting are both valuable techniques in NLP, each with its own advantages and disadvantages. The choice between them depends on the specific requirements of the task, the availability of data, and the desired level of accuracy.
